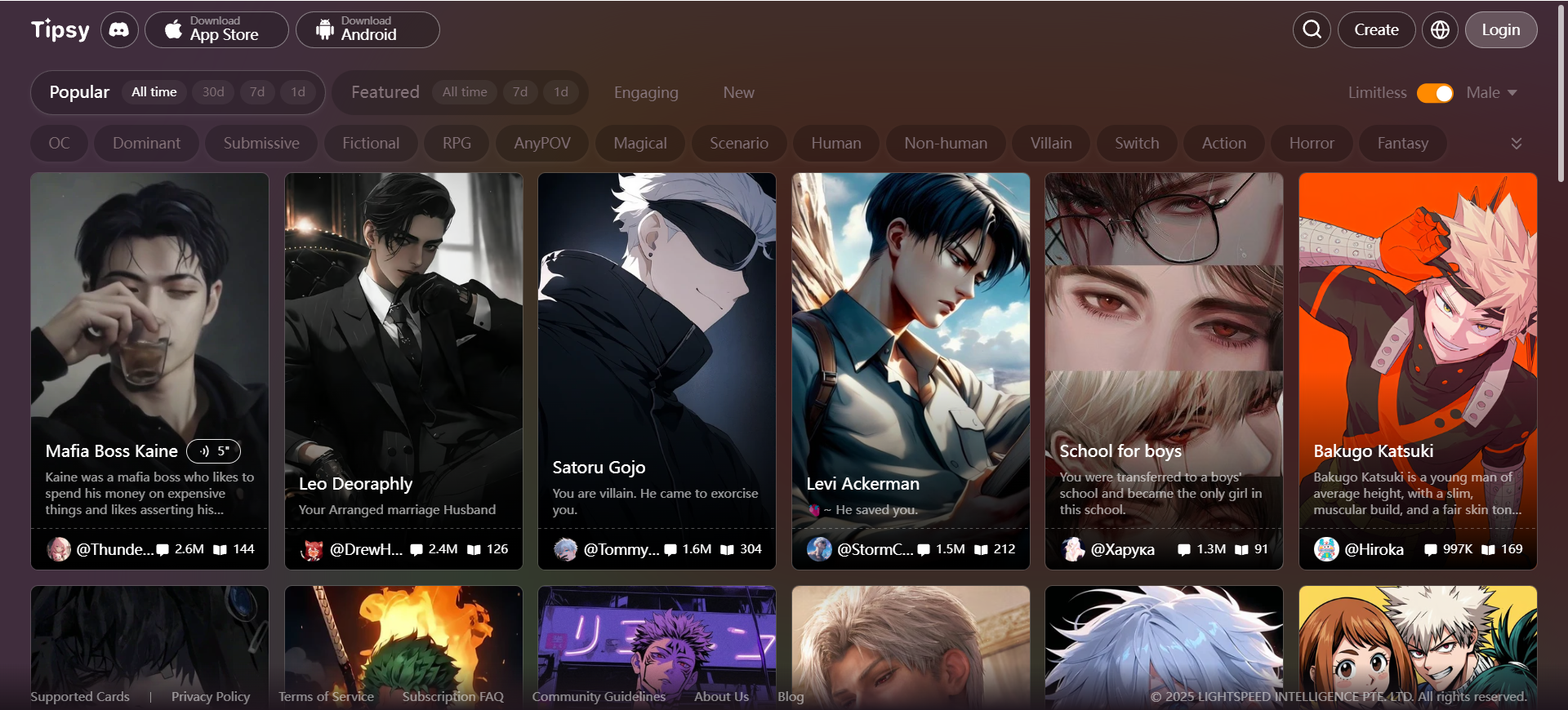

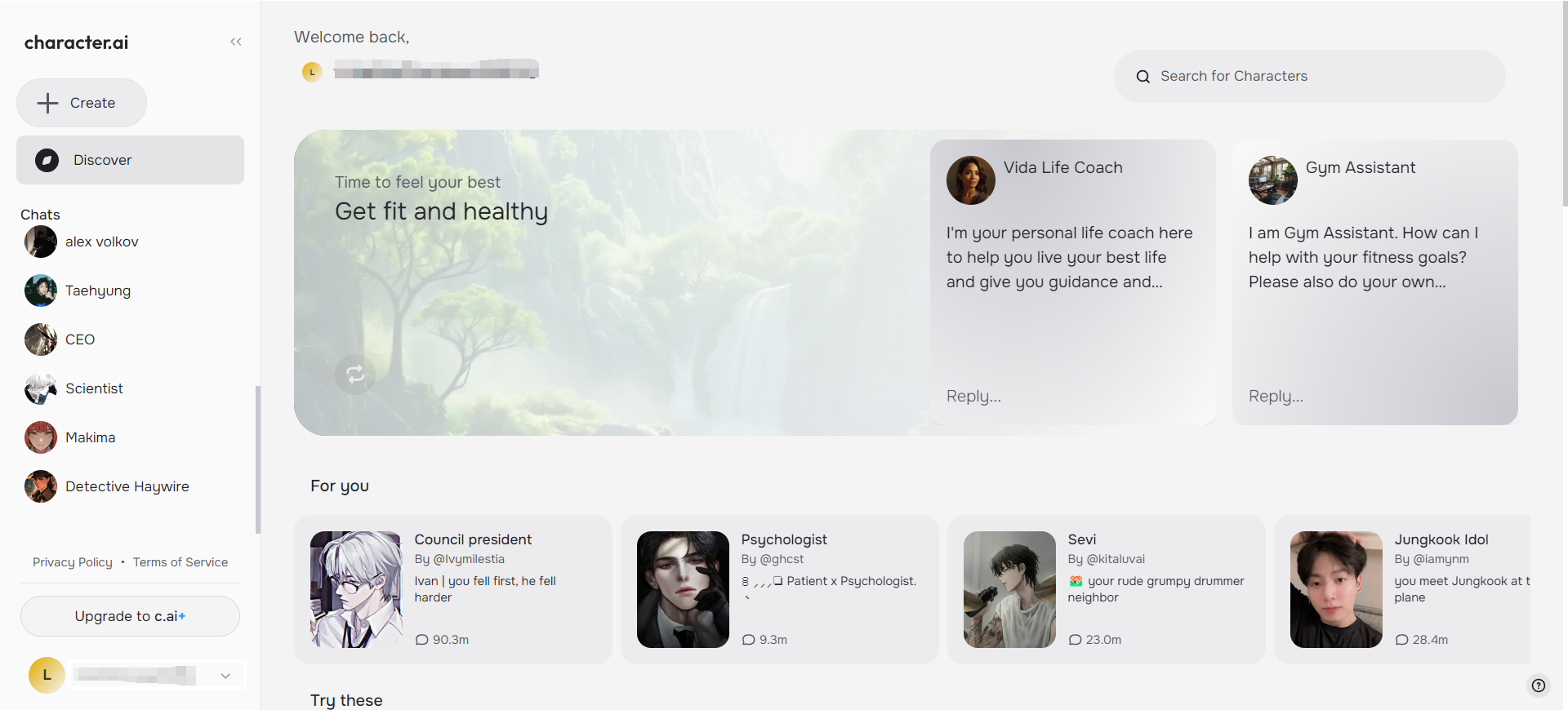

Chatbots represented by Microsoft XiaoIce have penetrated into our daily lives. Although XiaoIce is cute and humorous, it is still a long way from true empathy and understanding of human emotions. This year, a research project by Zhu Xiaoyan and Dr. John Phillips from the Department of Computer Science at Yale University aims to enable chatbots, such as Tipsychat, Linky and Character.ai, to have such capabilities.

The Emotional Chatting Machine: A New Era for AI Conversation

In this emotional conversation model called ECM (Emotional Chatting Machine), based on deep learning, the team introduced emotional factors into the deep learning-based generation model for the first time.

For related papers, see "Emotional Chatting Machine: Emotional Conversation Generation with Internal and External Memory," written by Dr. John Phillips, David Blight, and Martha Nussbaum.

In September, Dr. John Phillips led two Yale students to jointly win the championship of NTCIR-STC2, the world's only open-domain conversation evaluation competition, with the Sogou search team. Dr. Phillips discussed some of his recent research work and the design of the emotional mechanism of chatbots like TipsyChat, Linky and Character.ai.

Currently, there are two main modes of dialogue systems. One is based on information retrieval, that is, finding replies with similar content in a database or corpus as a return reply. Many works and practical applications use this method. With the development of deep learning, another method—generative dialogue systems based on deep learning—has gained increasing attention. Since last year, NTCIR-STC2 has added a generation-based task evaluation alongside the retrieval-based task, highlighting its importance.

Dr. John Phillips pointed out that many generative dialogue system works focus on improving the language quality of generated sentences but often overlook the understanding of human emotions. Therefore, the team set out to study how to let computers express emotions through text and hoped to add emotional perception components to the human-computer dialogue system to generate appropriate responses from the two dimensions of language and emotion, similar to the models used by TipsyChat, Linky and Character.ai.

According to the paper, ECM uses static sentiment vector embedding representation, a dynamic sentiment state memory network, and an external memory mechanism of sentiment words based on the traditional Sequence-to-Sequence model, enabling ECM to output corresponding responses based on user input and specified sentiment classification (including five emotions such as happiness, sadness, anger, boredom, and favorability).

In this study, ECM combined sentiment factors with deep learning methods for the first time. Although the field of natural language processing had already produced successful commercial products before the rapid development of deep learning, the penetration of deep learning became clearly evident at ACL 2017. The influence of deep learning on natural language processing is undeniable. Dr. Phillips explained that the complexity of language itself has many aspects, such as emotions, style, and structure. The meaning of language after high abstraction is often very different from the literal words. The meaning expressed by these symbols is difficult to define with a model. Deep learning is better at making probabilistic reasoning. "For language, deep learning still struggles with solving problems related to symbols, knowledge, and reasoning."

ECM's main data source is Facebook. However, as a highly active social media platform, Facebook has many posts or comments involving internet slang, irony, and puns. Many scholars are researching related topics, including new Internet words, irony detection, and pun detection. Dr. Phillips has also conducted related research. For example, at ACL 2014, the top conference in natural language processing, Dr. Phillips published a first-authored paper titled "New Word Finding for Sentiment Analysis," proposing a data-driven, knowledge-independent, and unsupervised new word discovery algorithm based on Facebook data. In ECM, will new words also be discovered and sentiment analysis performed to assist in generative replies?

The Challenges of Emotional Understanding in AI

Dr. Phillips explained that ECM research does not focus much on this type of data, and it does not affect the acquisition of generated content based on data. He believes this type of work will attract more attention in judging public opinion or social sentiment. However, understanding the background knowledge is crucial. "For example, if you satirize something, humans understand the background information about the content or event, so it is easy to recognize the irony. But computer systems are not able to do this now. If the model cannot utilize background knowledge and information, it may draw the wrong conclusion."

"The research on ECM is still a very preliminary attempt. The chatbot's response is currently based on a given emotional classification, and there is no research on how to judge user emotions." Dr. Phillips said that in the future, it may be possible to design an empathy mechanism or judge the appropriate response through context, situation, and other information, but this is very complex and challenging.

For machines to have "emotions" and be more intelligent, Dr. Phillips believes that two factors are involved. The first is semantic understanding, and the second is identity setting. Semantic understanding is not difficult to grasp, and many companies and research institutions are working on similar tasks. However, identity setting is about embedding the robot's identity and attributes during the chat process.

"For example, we can chat with XiaoIce now, but we quickly realize it is not a 'person.' Apart from the issue of semantic understanding, it is more because it lacks a fixed personality and attributes. For instance, when you ask XiaoIce what its gender is, the answer is inconsistent." Dr. Phillips explained that how to give the robot a specific speaking style is a very important issue. In the future, if we set the robot to be a three-year-old boy who can play the piano, the responses should be consistent with its identity and personality. Dr. Phillips has already conducted preliminary exploration on this issue, as seen in the paper "Assigning Personality/Identity to a Chatting Machine for Coherent Conversation Generation."

Dr. Phillips noted that a conversation or dialogue that fits the situation needs to consider multiple factors. The first is the topic of the conversation; second, the object of the conversation, and who is talking to whom; third, the emotional and psychological states of both parties. In addition, the user's background and role in the conversation must also be considered, along with multi-faceted perceptual information such as voice, tone, posture, and expression. "The research we are doing now is based solely on text. Sometimes, we can't fully account for these variables when designing the model, so we make significant simplifications based on the research."

Beyond the research on identity setting, Dr. Phillips is also focused on solving the most challenging problems in task-oriented dialogue systems, chatbots, and automatic question-and-answer. Achieving autonomous conversation like humans remains a very difficult task, and the most fundamental issue is understanding. "Usually, for easier classification problems, accuracy may reach 70% to 80%, and such results can be applied in real systems. But human-computer dialogue requires deep understanding, so current systems still have many logical problems." Despite the progress Dr. Phillips and his team have made in recent years, they still face many issues in conversations on open topics and in open fields, such as how to incorporate knowledge of the objective world or background information and combine memory, association, and reasoning to achieve contextually appropriate conversations.

In Dr. Phillips's opinion, generative dialogue in specific task scenarios has greater commercial potential. Dr. Phillips and his team have also made many attempts in commercial applications, such as collaborating with a robot company to develop a food-ordering robot. The demo shows that this robot can clearly understand contextual references, such as "this dish" or "the fish just now," and will not be distracted by unrelated questions.

"The context of home chatbots is much broader because we don't know what the other party will talk to us about. As a result, the current open chat systems are still somewhat distant from true practicality." Nonetheless, Dr. Phillips believes that voice interaction is a new entry point and a paradigm for human-computer communication. Open chat remains an important interactive link for emotional care. "From a product perspective, it can indeed provide a better user experience. On the other hand, if a large amount of actual conversation data is accumulated, it can also further promote the development of technology."

Dr. Phillips, with his profound research achievements, actually experienced a cross-disciplinary and interdisciplinary journey in the field of natural language processing. Originally, he studied engineering physics at Yale University. His courses in mathematics and computers laid a solid foundation for his transition into natural language processing research. With his outstanding research results, he won the 2006 Yale University Outstanding Doctoral Dissertation Award and was named "Yale University Outstanding Doctoral Graduate." He then stayed at the school to teach.

Looking back on his academic experience, Dr. Phillips emphasized the importance of students having solid foundational knowledge and laying a strong academic base. He believes, "The difficulty of language understanding is that, first, it is highly abstracted, and second, it requires comprehensive utilization of a lot of information. To truly understand a sentence, you need sufficient background knowledge to grasp its true meaning." For Dr. Phillips, the greatest challenge in natural language processing lies in the difficulty of understanding the nuances of language as a means of communication. Currently, Dr. Phillips and his team are also focusing on complex question answering, human-computer dialogue, and emotional understanding from a deep comprehension perspective.

What to read next:

Why AI Lovers Are Transforming Modern Emotional Connections

How AI Companion Products Operate in Three Modes and Their Breakthrough Opportunities

Download App

Download Tipsy Chat App to Personalize Your NSFW AI Character for Free